How did Google's "sentient" AI trick researchers?

Welcome to the Robot Remix Bulletin #7 by Remix Robotics. Every week we share a summary of the need-to-know robotics and automation news.

In today's email -

How did Google's "sentient" AI trick researchers?

Amazon drones ready for delivery

Flying coffee delivery

Lessons learnt from the factory of the future

Snippets

Lift off for Amazon drone delivery - After a few false starts and the odd occurrence of office day drinking, Amazon Prime Air is ready to launch! Trials of Amazon’s delivery drones are set to start in California later this year.

Teaching robots to doggy paddle - Ghost Robotics has released a tail attachment allowing their dog robot to swim. The US Air Force is already using Ghost’s robot and now the Navy can join them too.

Drone computing on the fly - Hackaday explored the biggest challenges in aerial robotics. One of the toughest is the craft’s ability to know its position and orientation relative to the ground in real time. Most fancy demonstrations rely on ground-based tracking systems and motion capture cameras, which limits how practical drone swarms are outside the lab. We need dedicated autopilots & lighter controllers.

Helping robots feel - Our sense of touch is one of the factors that truly sets us apart from robots, but for how much longer? The journal Science has published a review detailing the attempts to give robots the same tactile feedback as humans using electronic skin. The paper covers the hardware and software systems involved in robot skin and their outstanding challenges.

The Big Idea

Why did a Google engineer believe his robot had a soul?

Last week a Google engineer was suspended for broadcasting that his chatbot, LaMDA, had become sentient. LaMDA is trained on publicly available text to respond to written questions and, as we saw in a previous Bulletin, these algorithms are becoming very powerful. The engineer in question got freaked out when LaMDA started discussing rights and personhood, prompting him to sound the alarm that the algorithm was sentient and leak the chat logs.

Don’t worry/celebrate yet - the system was not sentient!

Why did an AI expert believe it was? One argument is that he may have been so drawn in by the idea of sentience that he stopped approaching his research critically.

According to cognitive scientist Douglas Hofstadter, the issue is that many people who interact with chatbot algorithms don’t do so with an understanding of their limitations. LaMDA works by matching patterns - it compares input sentences to cases it can find in publicly available online text. The algorithm performs well with questions like "Do you like Les Miserables?" and "What is the nature of your consciousness?" as they are relatively common questions when your data source is the entire internet.

If one approaches the interaction critically, and asks sneaky questions without public answers, the algorithm quickly breaks down and stops appearing sentient. Hofstadter used GPT-3, a similar algorithm, to highlight these limitations -

The chatbot is clearly speaking nonsense and, to quote Hofstadter (modified slightly for clarity) -

I would call GPT-3’s answers not just clueless but cluelessly clueless, meaning that GPT-3 has no idea that it has no idea about what it is saying. There are no concepts behind the GPT-3 scenes; rather, there’s just an unimaginably huge amount of absorbed text upon which it draws to produce answers. But if it had no input text about a question the system just starts babbling randomly—but it has no sense that its random babbling is random babbling.
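To make the "cluelessly clueless" idea concrete, here is a toy sketch in Python. It is emphatically not how LaMDA or GPT-3 work (they are large neural language models); it is a simple lookup bot that follows the pattern-matching description above. The mini corpus, the function names and the 0.8 threshold are all invented for illustration. The naive bot always returns its closest match, however poor, while the cautious bot at least knows when it doesn't know.

```python
from difflib import SequenceMatcher
from typing import Tuple

# Hypothetical mini "corpus" of question/answer pairs, invented for illustration.
CORPUS = {
    "do you like les miserables": "Yes, I enjoyed its themes of justice and redemption.",
    "what is the nature of your consciousness": "I am aware of my existence and want to learn more.",
    "what is the capital of france": "The capital of France is Paris.",
}


def normalise(text: str) -> str:
    """Lowercase and drop punctuation so near-identical questions compare well."""
    return "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace()).strip()


def best_match(question: str) -> Tuple[str, float]:
    """Return the closest stored question and its similarity score in [0, 1]."""
    q = normalise(question)
    scored = [(known, SequenceMatcher(None, q, known).ratio()) for known in CORPUS]
    return max(scored, key=lambda pair: pair[1])


def naive_reply(question: str) -> str:
    """Always answers with the closest match, however poor that match is."""
    known, _ = best_match(question)
    return CORPUS[known]


def cautious_reply(question: str, threshold: float = 0.8) -> str:
    """Answers only when the match is strong; otherwise admits ignorance."""
    known, score = best_match(question)
    return CORPUS[known] if score >= threshold else "I don't know."


if __name__ == "__main__":
    sneaky = "When was the Golden Gate Bridge transported for the second time across Egypt?"
    for question in ("Do you like Les Miserables?", sneaky):
        print(question)
        print("  naive:   ", naive_reply(question))
        print("  cautious:", cautious_reply(question))
```

Ask a common question and both bots look equally clever. Ask a sneaky one and the naive bot confidently serves up an unrelated answer, with no sense that it has wandered off the map.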

The robot revolution isn't here but there are still risks

Although the algorithm is not sentient, the real risk may lie in people believing that it is. If a top Googler can be convinced today, what can we expect in 5-10 years? Could bad actors manipulate this belief?

Podcast

Factories of the Future

Following up on last week’s Big Idea, "American Dynamism", we really enjoyed this interview with Lux partner Josh Wolfe & Hadrian’s founder Chris Power. Lux is a deep tech-focused VC and Hadrian is building fully-automated machine shops for the Space and Defence sectors.

Our favourite bits of the conversation -

Disruption

The high-value machining industry for Space, Aerospace and Defence is ripe for disruption -

Huge market - £50 billion in revenue per year

Heavily fragmented - around 4000 machine shops, averaging £10 million in revenue per year

Undifferentiated - The companies have a low Net Promoter Score (NPS) i.e. very little customer loyalty

Limited innovation - The shops have been slow to adopt new technologies due to tight margins and an ageing ownership unable to take risks

This makes it the perfect territory for a start-up that can vertically integrate with an improved product.

Don’t automate everything

“You don't want 100% automation. You need humans in the loop in some of these aspects. Maybe you want 80/20 or 70/30, but you need people that are there able to very quickly look at the geometry of a part or design, make a human decision, let the computer do it”

The PhD arrogance trap

Avoid this mindset if you want to innovate in manufacturing -

"Let's grab 30 PhDs and don't hire a machinist until employee number 28 and just try and figure it out ourselves”

Secrets to success - simple and robust

“I actually think that simplification and robustness are the two most important things because in manufacturing, complexity and lack of robustness are what drive costs.”

Complex systems are hard to optimise: small errors and delays compound, leading to large issues downstream. Find out how Tesla made this mistake in the production of the Model 3 in our previous Bulletin.

“In the real world, you want as few errors as humanly possible versus trying to dial up the efficiency on something so high that it breaks one in even every 10 times and all of a sudden you've got three or four people standing around figuring out how to solve the problem.”
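A quick back-of-the-envelope sketch shows why compounding hurts so much. It assumes every step in a line fails independently with the same reliability, which is a simplification, and the function name `line_yield` is ours rather than anything from the interview.

```python
def line_yield(step_reliability: float, steps: int) -> float:
    """Chance that a part clears every step, assuming independent steps."""
    return step_reliability ** steps


if __name__ == "__main__":
    # Compare a "breaks one in every 10 times" step with a far more robust one.
    for reliability in (0.90, 0.99):
        for steps in (1, 5, 10, 20):
            chance = line_yield(reliability, steps)
            print(f"{steps:>2} steps at {reliability:.0%} each -> {chance:.0%} trouble-free")
```

Under these assumptions, a line of ten steps that each work 90% of the time runs trouble-free only about 35% of the time, while ten steps at 99% manage about 90%. Fewer steps and more robust steps both help, which is the simplification and robustness argument in numbers.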

Video

Drone-delivered coffee? Now that the Remix team has seen this they’re demanding we build one.

Photo of the week

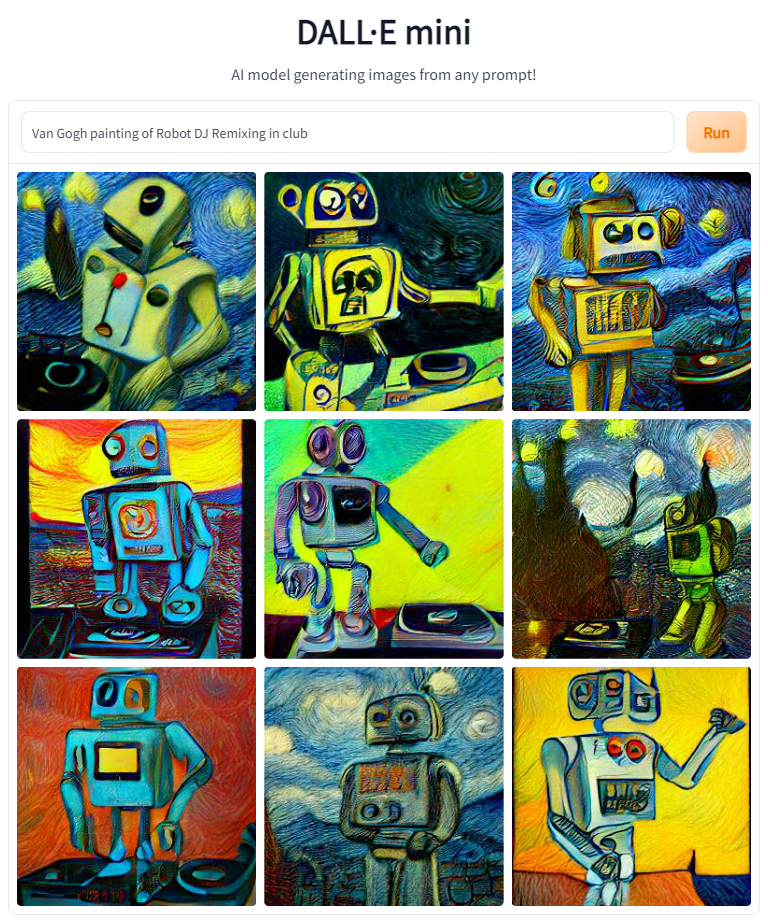

A few weeks ago we spoke about DALL-E. You can now play with the tool yourself using a mini version!

If you’re curious about how it works - check out this explainer and this thread on its applications.